Notes from Sage’s 2023 Critical Thinking & Artificial Intelligence Boot Camp

Today, I’m participating in Sage’s 2023 Critical Thinking (CT) & Artificial Intelligence (AI) Boot Camp. Here are my notes and thoughts, brought to you live. The presentation is being recorded and will be posted soon on the Boot Camp’s page on the Sage website.

Keynote Dr. Leo Lo

First up is Dr. Leo Lo – providing an engaging keynote about the roles of librarians in the conversation about AI and CT on campus, balancing enthusiasm and caution around the uncertainty as the field grows. The goal is to position librarians as the place on campus to bring faculty and students together, with an eye on employability. Focus on empowerment & try different things.

Critical skills include:

- Analytical thinking & prompt engineering

- AI literacy, notably around capabilities & limits of AI

- Ethical reasoning around core values & principles

- Continuous learning

Roles of librarians:

- Resource curators

- AI Advocates

- Libraries as campus collaborative hubs (spaces, devise & promote best practices)

- Ethics discussion leaders

Recent paper:

Lo, L. S. (2023). The CLEAR path: A framework for enhancing information literacy through prompt engineering. Journal of Academic Librarianship, 49(4), Article 102720. https://doi.org/10.1016/j.acalib.2023.102720

Source: Sage 2023 Boot Camp

First Panel – Critical Thinking

J. Michael Spector highlights the importance of John Dewey’s How We Think (1910, 2011) for learning by experience, especially at the outset of a student’s career, in middle school.

Madeleine Mejia offers a powerful analysis of using technology in CT, leveraging many thinkers such as Facione (1990). See her recent article:

Mejia, M., & Sargent, J. M. (2023). Leveraging Technology to Develop Students’ Critical Thinking Skills. Journal of Educational Technology Systems, 51(4), 393–418. https://doi.org/10.1177/00472395231166613

Source: Sage 2023 Boot Camp

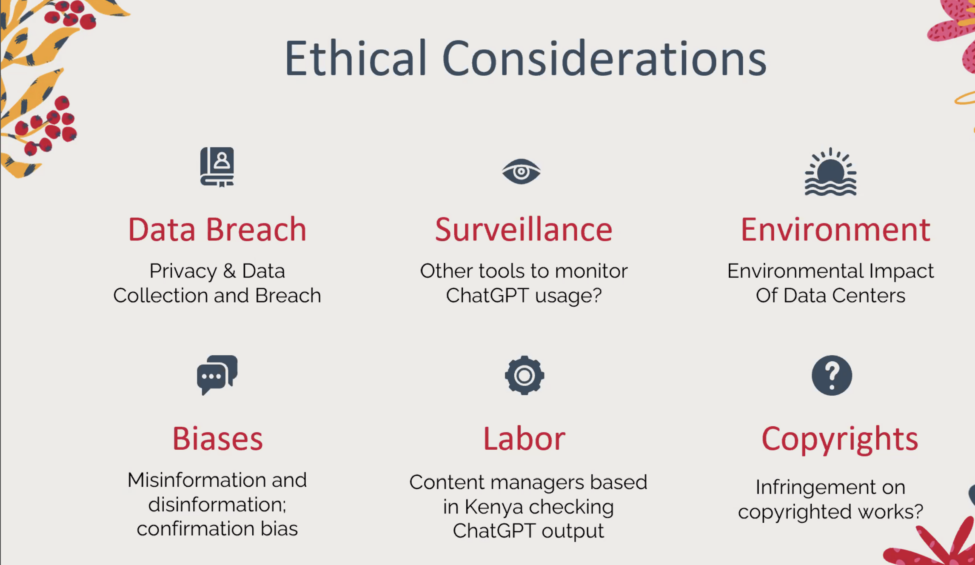

Raymond Pun proposes many concrete ideas for curating a learning experience around CT & AI. Here is a good list of ethical issues to consider with AI by Raymond Pun:

2nd Panel – mis/dis-information

Altay, S., Berriche, M., & Acerbi, A. (2023). Misinformation on Misinformation: Conceptual and Methodological Challenges. Social Media + Society, 9(1). https://doi.org/10.1177/20563051221150412

Source: Sage 2023 Boot Camp chat

Dan Chibnall, STEM Librarian, Drake University: fact checking is a proactive approach, not a reactive one. Truth, noise – ChatGPT will exacerbate the problem. Beware of offloading CT and learning to these tools. Cognitive biases and confirmation bias… and the loss of discovery (the autopilot of letting the tools do the work).

Web Literacy for Student Fact-Checkers, Mike Caulfield, Washington State University Vancouver, 2019, https://open.umn.edu/opentextbooks/textbooks/454

Source: Open Textbook Library

Richard Wood, associate professor of practice at the Norton School of Human Ecology, University of Arizona. Critical thinking requires a lot of energy; your brain is mobilized in ways many find uncomfortable. How to approach claims: the ladder of abstraction (deconstruct statements), the enthymeme (Aristotle), evidence to support premises. Science does not “prove”; it provides insight and evidence toward a consensus.

Brooklyne Gipson, assistant professor of communication at the University of Illinois, Urbana-Champaign. Teaches race and gender issues. Alternate epistemologies, mindfulness of this space. Acknowledge that differences may be socialized from one’s past and are a key component of identity. LLMs and GPTs simply regurgitate variations of what is said, no fact checking. Engaged pedagogy. Rooted in social media space, acknowledge media literacy and bias as a shifting dimension.

Richard Rosen, retired professor of practice and chair of the Personal and Family Financial Planning program at the University of Arizona. Bill Gates: AI is probably the biggest development in computing since the personal computer. Endemic cheating. Early 1980s: calculators enter colleges. Does AI make up facts? Are AI and search engines the same? Lawyer in Texas using ChatGPT to look for case law & hallucinations. Use but verify. Facts vs. opinions. Find the source.

3rd Panel – what students want from AI and what they want you to know

Sarah Morris, librarian & PhD student. Students want to finish an assignment ASAP. Understanding AI: opportunities, challenges, limitations. Points of interest: AI literacy; possibilities/limitations; policy issues; algorithmic literacy = dealing with assumptions and identifying knowledge gaps. Job prospects; lifelong learning; ways to connect to lived experiences of students.

Brady Beard, reference and instruction librarian at Pitts Theology Library, Candler School of Theology, Emory University. Humans in the loop of the information landscape. Looking for hallucinated citations and sources. Generative AI is not absolutely novel in many ways considering recent developments. Librarians are non-evaluative contributors to the learning experience, so it is easier to be truthful with them about one’s approach to the work. Reframe conversations about plagiarism and academic integrity: this is not the way forward as these tools have great promise for the future. Adjust our assessments (e.g., an oral examination in a Zoom call). These systems are not magic… using the term “hallucination” places agency in algorithms that they don’t have. What are the costs of these systems and tools?

I am sorry to miss the end of this Boot Camp as I have another commitment. Apologies to Hannah Pearson, fiction writer, and Anne Lester, graduate student, for missing their presentations.

This content was last updated on 2023-08-08 at 1:50 pm.